Update: I want to thank everybody that has offered a way to access such a netbook. We now have access to the needed hardware and are trying to fix the bug as soon as possible! Much appreciated.

Do you have a netbook (from around 2011) with AMD processor, please take a look if it is bobcat processor (C-30, C-50, C-60, C-70, E-240, E-300, E-350, E-450). If you have one and are willing to help us giving vpn/ssh access please contact me (hverschore [at] mozilla.com).

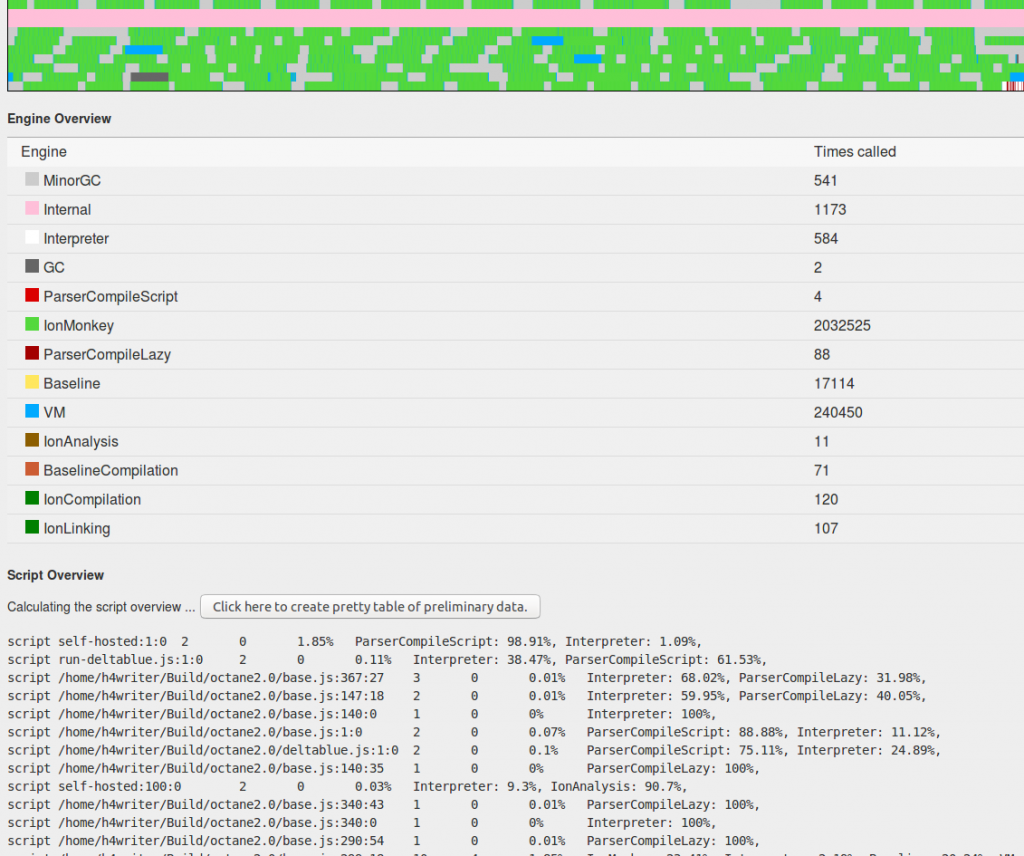

Improving stability and decreasing crash rate is an ongoing issue for all our teams in Mozilla. That is also true for the JS team. We have fuzzers abusing our JS engine, we review each-others code in order to find bugs, we have static analyzers looking at our code, we have best practices, we look at crash-stats trying to fix the underlying bug … Lately we have identified a source of crashes in our JIT engine on specific hardware. But we haven’t been able to find a solution yet.

Our understanding of the bug is quite limited, but we know it is related to the generated code. We have tried to introduce some work-around to fix this issue, but none have worked yet and the turn-around is quite slow. We have to find a possible way to work-around and release that to nightly and wait for crash-stats to see if it could be fixed.

That is the reason for our call for hardware. We don’t have the hardware our-self and having access to the correct hardware would make it possible to test possible fixes much quicker until we find a possible solution. It would help us a lot.

This is the first time our team tries to leverage our community in order to find specific hardware and I hope it works out. We have a backup plan, but we are hoping that somebody reading this could make our live a little bit easier. We would appreciate it a lot if everybody could see if they still have a laptop/netbook with an bobcat AMD processor (C-30, C-50, C-60, C-70, E-240, E-300, E-350, E-450). E.g. this processor was used in the Asus Eee variant with AMD. If you do please contact me at (hverschore [at] mozilla.com) in order to discuss a way to access the laptop for a limited time.